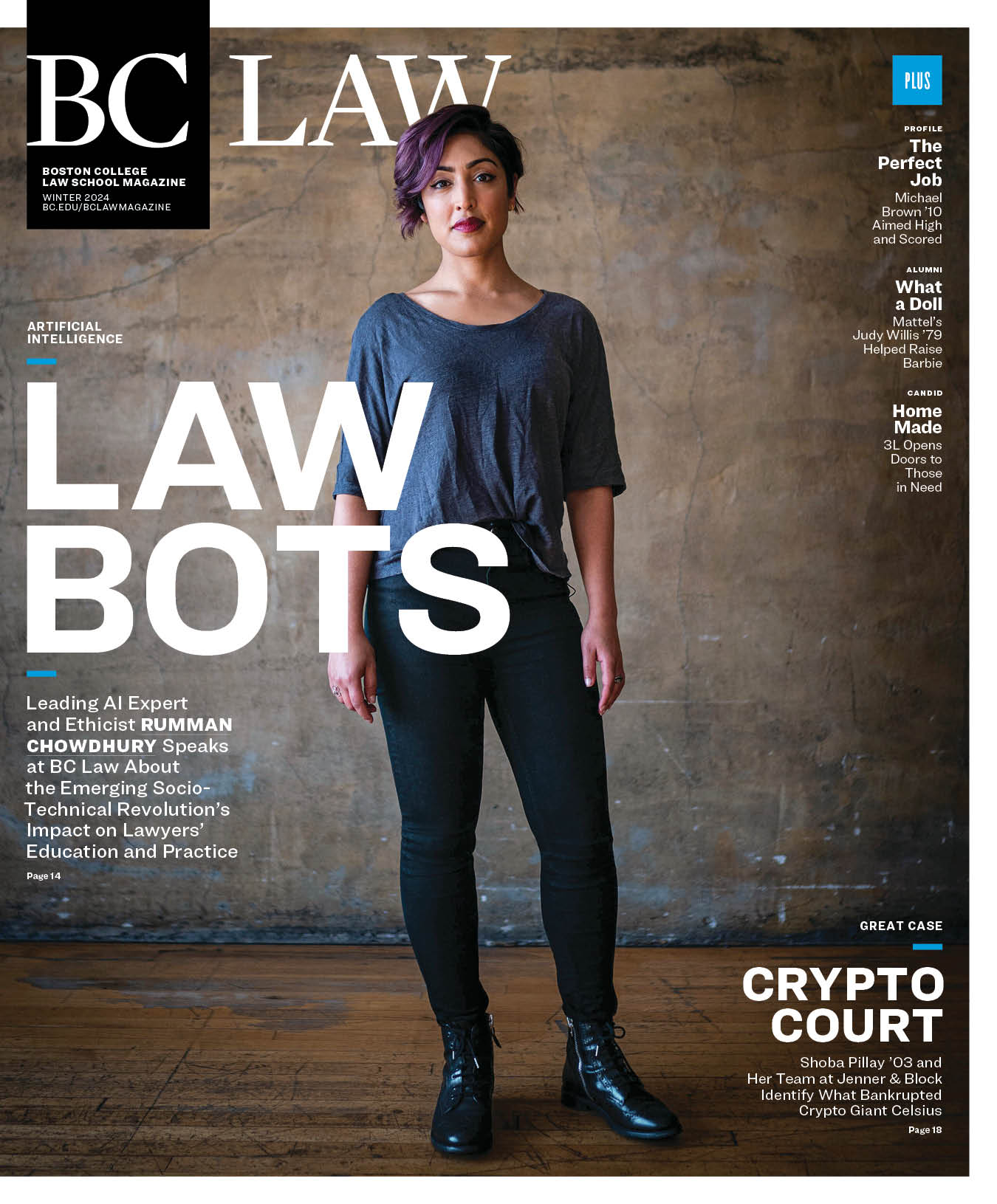

Rumman Chowdhury, PhD, is on the cutting edge of “social-technical solutions for ethical, explainable, and transparent AI,” and is widely recognized as a leading voice on managing the potential of artificial intelligence. She attended BC Law’s International IP Summit in October as the keynote speaker and also met with international law expert Dean Odette Lienau. Their conversation ranged from regulating this new algorithmic phenomenon and its global policy challenges to educating lawyers to capably handle and leverage AI for society’s well-being.

RC: Artificial intelligence refers to a very narrow set of technologies, but the term has come to be used for so many things. Generative AI is a new class of models that takes an input, usually in a text-based format, and generates something based on what it’s being told. The newer wave of it is multimodal, meaning you can give it text, audio, video, or images, and it can output text, audio, video, or images. The best explanation I’ve ever heard of it was actually by Neil Gaiman, the sci-fi author, and he said it is truth-shaped information. It’s not necessarily factual by definition. It is just very realistic sounding. The verification actually happens later. That can cause real problems, as we have seen.

It’s interesting from a legal perspective, because right now my world [applied algorithmic ethics] is embroiled in thinking through regulation and thinking through standards. We do not even have standardized ways of doing testing or auditing. One reason I have my nonprofit is to create a centralized community, a platform people can go to, and also to design education around algorithmic auditing. Laws are now being passed that mandate auditing: in Europe, the Digital Services Act and EU AI Act; in the US, New York City’s AI Bias Audit Law and the Colorado Privacy Act.

OL: Thinking about auditing, I go immediately to accounting, which is another area in which people call for improved regulation and standardization—for tax purposes, company books, and so on—including across national jurisdictions. Well, how do you standardize these approaches? You have complex links between the official laws and the regulatory bodies, and questions about even the definition of what is being audited. Depending on how you define the audit target, you’re going to end up with very different outcomes and even different processes for how you would conduct the audit itself.

RC: In trying to resolve those kinds of issues, we draw a lot from privacy. Explaining what we do and even helping to write legislation around what we do is very difficult. The EU Digital Services Act tries to get at these questions by, for example, mandating that all major platform companies that impact more than a certain number of European citizens audit their models for impact on free and fair elections, violation of fundamental human rights, mental health and well-being, and gender-based violence. But just trying to audit and regulate for things like fundamental human rights is very, very difficult.


OL: I agree. If you’re thinking about levels of regulation, you could also have global governance that is then enforced at the national level. There are various models along those lines, such as human rights covenants that countries enforce in different ways (or don’t enforce, as the case may be), for example, with criminal or civil sanctions. So, you could establish a range of rights, regulations, or audit expectations in this area, perhaps set according to international guidelines or a treaty. Governments could then take measures to embed them into national law. In other cases, governments, through court doctrines or broader legislative approaches, could have the rules come into force automatically, without requiring additional steps for enforcement.

RC: In addition to those strategic regulatory efforts, I hope that a lot of the investment in and attention to AI models comes with a built-in intentionality toward solving big problems. I often think that the field of responsible use can hyperfocus on preventing bad things, which is very necessary. But preventing bad things does not immediately mean that you are creating good things. There needs to be a focus on creating good.

OL: That is so true. And it is absolutely certain that AI is going to shift the way lawyers work—and the way law students learn—and that can be distressing. But it is also certain that the world needs more legal services. I believe that you’re going to need that human hand and human intelligence to guide what AI is, even if it becomes a powerful tool. I’m hopeful that, if we can train lawyers to engage with this area in an ethical, thoughtful, and strategic way, it could help us to solve some of the access-to-justice problems that are present in so many parts of the law. That’s my message of hope and my goal for now.

For more in the online magazine on Dean Lienau’s plans for AI education at BC Law, see Behind the Columns, and for more on Chowdhury’s activities during the International IP Summit, see In Brief.